References

1. [CogVLM2-video] Hong, Wenyi and Wang, Weihan and Ding, Ming and Yu, Wenmeng and Lv, Qingsong and Wang, Yan and Cheng, Yean and Huang, Shiyu and Ji, Junhui and Xue, Zhao and others. CogVLM2: Visual Language Models for Image and Video Understanding. arXiv preprint arXiv:2408.16500, 2024.

2. [GPT-4] Achiam, Josh and Adler, Steven and Agarwal, Sandhini and Ahmad, Lama and Akkaya, Ilge and Aleman, Florencia Leoni and Almeida, Diogo and Altenschmidt, Janko and Altman, Sam and Anadkat, Shyamal and others. GPT-4 Technical Report. arXiv preprint arXiv:2303.08774, 2023.

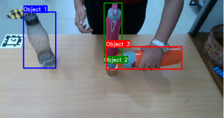

3. [Grounding DINO 1.5 Pro] Ren, Tianhe and Jiang, Qing and Liu, Shilong and Zeng, Zhaoyang and Liu, Wenlong and Gao, Han and Huang, Hongjie and Ma, Zhengyu and Jiang, Xiaoke and Chen, Yihao and Xiong, Yuda and Zhang, Hao and Li, Feng and Tang, Peijun and Yu, Kent and Zhang, Lei. Grounding DINO 1.5: Advance the "Edge" of Open-Set Object Detection. arXiv preprint arXiv:2405.10300v2, 2024.

4. [SAM 2] Ravi, Nikhila and Gabeur, Valentin and Hu, Yuan-Ting and Hu, Ronghang and Ryali, Chaitanya and Ma, Tengyu and Khedr, Haitham and Rädle, Roman and Rolland, Chloe and Gustafson, Laura and Mintun, Eric and Pan, Junting and Alwala, Kalyan Vasudev and Carion, Nicolas and Wu, Chao-Yuan and Girshick, Ross and Dollár, Piotr and Feichtenhofer, Christoph. SAM 2: Segment Anything in Images and Videos. arXiv preprint arXiv:2408.00714, 2024.